My favorite log aggregation service

Previously, I wrote about my favorite home lab metrics DB. This time I want to talk about another component of the monitoring stack: log aggregation.

Spoiler: this post is going to be about Grafana Loki and how the promise of cost effectiveness and ease of operation works really well in the unique environment of home lab and small self-hosted networks.

The core values of Loki project are communicated very clearly - right on the Loki webpage, you can read that Loki..

..is designed to be very cost effective and easy to operate.

First of all, let's think about this from home lab perspective..

Let's talk about cost

This is going to be a very subjective topic, but I dare say that in a typical home lab / self-hosted scenario there actually aren't that many logs to deal with.

If one looks at the most popular software that people run in their homes, it's stuff like Vaultwarden, Jellyfin, Nextcloud or some other similar service, and (again, speaking in general) these services have only a handful of users.

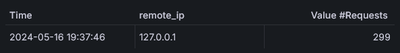

In my experience, unless the services are directly exposed to the Internet weather, there is mostly silence. To provide a more specific example, looking a week back at one such service, there are about three hundred logs per day:

Clearly the CPU or disk usage needed to collect these is not really a concern. So why even talk about cost? And why is it so common to see home labs that have no centralized log solution?

The ELK in the room

First of all, I don't want to single out the ELK stack here. It is just an example I'm somewhat familiar with, used here only to drive the point home. ELK can still be the right tool for the right job in many cases. Here I'm approaching it from the point of view of a relatively small self-hosted/home lab environment.

Elasticsearch can be a bit heavy, sure. There might be some tuning involved if one tries to scale it to the environment where there might be limited resources. But that is usually not the most pressing cost of operating the stack. The real cost to pay shows later.

Log in(di)gestion

Elasticsearch indexes every log before storing it. To do this job well, it expects the log to be properly parsed. This is where Logstash (or some other tool) comes in, pre-chewing the raw logs. The better the pre-processing job is, the better the search experience will be. Which means that there's a bit of upfront work to start collecting logs in a meaningful way:

- Parsing out data from the raw log. Whether it's JSON, logfmt or some other format, breaking it into individual fields improves later ability to search for specific value.

- This often needs to be done in nested fashion - some JSON log might contain a field that is also formatted in specific way that might be worth breaking into separate pieces of data.

- Extra field-level processing beyond simple parsing. Some fields might require mangling - your request size might need to be parsed from a 20kB string into a 20 000 bytes field, your durations might be better expressed in common units as well.
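To make that upfront work a bit more tangible, here is a minimal Python sketch of what such a pre-processing step might look like. It is not how Logstash does it. The field names (`details`, `request_size`) and the decimal unit table are assumptions made up for this illustration.

```python
import json
import re

# Hypothetical unit table for this sketch; decimal multipliers are an assumption.
_UNITS = {"B": 1, "kB": 1_000, "MB": 1_000_000, "GB": 1_000_000_000}


def parse_size(text: str) -> int:
    """Turn a human-readable size such as '20kB' into a number of bytes."""
    match = re.fullmatch(r"\s*(\d+(?:\.\d+)?)\s*([kMG]?B)\s*", text)
    if match is None:
        raise ValueError(f"unrecognised size: {text!r}")
    number, unit = match.groups()
    return int(float(number) * _UNITS[unit])


def preprocess(raw_line: str) -> dict:
    """Parse a JSON log line, unpack a nested JSON field and normalise units."""
    record = json.loads(raw_line)
    # Nested parsing: the hypothetical 'details' field holds JSON as a string.
    if isinstance(record.get("details"), str):
        record["details"] = json.loads(record["details"])
    # Field-level processing: store the size as a plain number of bytes.
    if "request_size" in record:
        record["request_size_bytes"] = parse_size(record.pop("request_size"))
    return record
```

Every log source with a different shape needs its own variant of `preprocess`, which is exactly where the effort goes.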

This upfront processing helps to improve the usefulness of the stored data, but it's not without side effects:

- Maintenance burden even beyond initial setup:

  - Make sure fields across the logs in the same index don't have conflicting field types. This is often a problem even within a single program, as log output does not necessarily have a well-defined schema. Should we store the 300 Multiple choices HTTP response code as a number or as text?

  - Handle special cases where some logs don't exactly follow the correct format specification.

  - All of the above again when the log format changes after an application upgrade. It does not help that changing the log format is rarely considered a breaking change.

- Loss of information:

  - Unless you're willing to effectively double the size of the stored logs and attach the original raw message as an extra field, the information about what the original log line looked like is lost.

  - Some logs don't fit the common schema or are parsed entirely incorrectly. This makes them less discoverable or completely lost. Often these are exactly the logs you don't want to miss - like an unexpected error that the program didn't handle correctly and, as a result, didn't log in the usual format.
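The field type conflict is easy to reproduce on paper. The following Python sketch is only an illustration of the problem, not of anything Elasticsearch does internally: it scans already-parsed records and reports fields that show up with more than one type. The sample records are made up.

```python
from collections import defaultdict


def find_type_conflicts(records):
    """Return {field: sorted type names} for fields seen with more than one type."""
    seen = defaultdict(set)
    for record in records:
        for field, value in record.items():
            seen[field].add(type(value).__name__)
    return {field: sorted(types) for field, types in seen.items() if len(types) > 1}


# Two hypothetical records from the same program, logging the status differently.
records = [
    {"status": 300, "path": "/a"},
    {"status": "300 Multiple choices", "path": "/b"},
]
conflicts = find_type_conflicts(records)
```

Here `conflicts` ends up flagging `status` as both `int` and `str`, which is the kind of thing a schema-first store forces you to resolve before the logs are even stored.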

In these circumstances hesitation to add more log sources is definitely understandable. In the realm of small home lab, where storage might be cheap, but time certainly isn't, this is exactly the real cost of centralized logging. It is what moves the logging work from ✔️DONE to the ⏱️TODO column.

This is not actually a problem with Elasticsearch. At its core it's a problem of cost and value. Elasticsearch will process and store each line as if it were the most valuable document there ever was. However, in real life not all logs are equally important, and frankly most logs are useless until the day you really need them (and for most, this day will never come). Somewhere along this path you'll almost inevitably end up thinking..

I essentially need to just store logs in shared directory and then sometimes grep some of them. Surely there must be an easier way to do this!

Before you set up an NFS share and begin logging to it (I wish I had never seen this in production, but life is sometimes cruel), let me introduce you to..

Loki

The idea of log aggregation in Loki is kind of flipped on its head. When ingesting logs, Loki does very little. It only indexes the metadata (the labels), and you're encouraged to be very conservative with those. Labels should be used sparingly - the general recommendation is to use just enough to identify the source of the logs. The hard work is left for later, at query time.

This reduces the complexity of log ingestion and as a result also saves the cost of:

- Hardware: There's (almost) no index, so you don't have to store it or put load on CPU to calculate it in the first place. If you process a lot of logs, Loki can ingest more data with fewer resources. It will also require less storage. But following our thinking above with the assumption that there isn't actually a lot to process, the second point is more important:

- Complexity: Because there is very little processing done by Loki on ingestion, there is a very low barrier to entry for adding logs. The system allows for a "store now, think about structure later" mindset. There's very little resistance to adding stuff to be collected. This is where Loki really delivers on its cost efficiency promise.
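As a mental model only (this is a toy, not how Loki is implemented), the "index the labels, keep the lines raw" idea can be sketched in a few lines of Python. The class and method names are invented for this illustration.

```python
from collections import defaultdict


class TinyLogStore:
    """Toy model: index streams by label set, keep raw lines untouched."""

    def __init__(self):
        # Key: frozenset of (label, value) pairs. Value: raw lines in arrival order.
        self._streams = defaultdict(list)

    def push(self, labels: dict, line: str) -> None:
        """Ingestion does no parsing at all; the line is stored as received."""
        self._streams[frozenset(labels.items())].append(line)

    def query(self, selector: dict, contains: str = "") -> list:
        """Pick streams whose labels match the selector, then scan their lines."""
        wanted = set(selector.items())
        hits = []
        for labels, lines in self._streams.items():
            if wanted <= labels:
                hits.extend(line for line in lines if contains in line)
        return hits
```

The label lookup narrows things down to a few streams, and everything else is a brute-force scan at query time. With home lab volumes, that scan is cheap.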

How does it work in practice? Well, let me show you.

Installation

I don't want to delve into this too much. I just want to point out how beneficial value alignment between a product and its end user is. Looking at the architecture docs, there is a lot going on. This makes sense, because Grafana (the company) needs to be able to operate the service at scale. But because being cost effective and easy to operate are core values of the project, we're also offered a single Docker image or even just a single binary to deploy. Perfect for what we need.

The service is exposed via pretty simple HTTP API, which means it's also easy to hide behind SSL reverse proxy - chances are you already have one deployed. Place the service behind BasicAuth and we have some form of access control in place. Easy!
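To show how simple that HTTP API is, here is a rough Python sketch that builds a request for Loki's push endpoint (`/loki/api/v1/push`) with a BasicAuth header. The user name, password and labels are placeholders, and the sketch assumes the reverse proxy passes the path through unchanged. It only builds the request; nothing is sent.

```python
import base64
import json
import time
import urllib.request


def build_push_request(base_url, user, password, labels, lines):
    """Build (but do not send) a JSON push request for the given log lines."""
    now_ns = str(time.time_ns())  # Loki expects nanosecond timestamps as strings
    payload = {
        "streams": [
            {"stream": labels, "values": [[now_ns, line] for line in lines]}
        ]
    }
    # BasicAuth credentials are checked by the reverse proxy in this setup.
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url=f"{base_url}/loki/api/v1/push",
        data=json.dumps(payload).encode(),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
        method="POST",
    )


# Placeholder credentials and labels, for illustration only.
request = build_push_request(
    "https://loki.mylan", "me", "secret", {"host": "nas"}, ["hello from the lab"]
)
```

Passing `request` to `urllib.request.urlopen` would actually ship the line. In practice you'll want a proper log shipper to do this for you.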

I'm sure you can figure out the rest from here. Let's start using the service.

Shipping logs to Loki

There are many options out there. But I really like what Vector by Datadog has to offer here. One benefit is that you can get by with pretty minimal configuration. Effectively you really only need to configure the sink and some input.

So something like this:

    sources:
      journald_logs:
        type: journald
    sinks:
      loki:
        type: loki
        endpoint: https://loki.mylan
        inputs:
          - journald_logs

With those 9 lines of configuration, you're shipping all of the service logs to Loki. If you want to get more advanced than that, you can. Vector provides the amazing Vector Remap Language for some very advanced log transformation. But my point here is that you don't have to. Need to add some log files? Easy:

    sources:
      journald_logs:
        type: journald
      # Add source here:
      my_app_logs:
        type: file
        include:
          - /all/the/logs/here/*.log
          - /also/this/log/here.log
    sinks:
      loki:
        type: loki
        endpoint: https://loki.mylan
        inputs:
          - journald_logs
          - my_app_logs # ..and here

The magic here is that this just works. Vector ships a minimal set of labels and Loki will happily store whatever you send. You really only have to care enough to make your service log to stdout or to point Vector to the log files. There is no schema, no index to manage, no log formats to parse.

Are you ever going to look at those logs? Maybe not, but you spent only a couple of seconds adding them to the system. There's no reason not to send those logs. You're better off casting a wide net and collecting more logs in broad patterns than painstakingly selecting individual items to collect.

Searching logs with Loki

One day you realize that you actually want to search the logs. Well good news, the logs have been collected, because it was so easy!

But how do we get the data out if we just piled random sources into Loki? Isn't that going to be a pain? After all, there was no indexing.. Well, fear not, because that's where LogQL, Loki's log query language, steps in to save the day.

Let's work with a practical example: GitLab container logs. If you've seen those before, you're already a bit concerned. It's a bunch of services all in one container, each with its own log format - some are JSON, some are not, some are multi-line. Oh my. 😰

Let's start building that query. Select all of the container logs:

{container="gitlab"}

This gives us the garbage we described above. But I'm actually interested in a specific log line in JSON format, so parse everything as JSON and drop anything that does not parse:

      {container="gitlab"}
    + | json | __error__=""

We could be more specific only filtering JSON parsing errors:

__error__ != "JSONParserErr"

Nice! Getting somewhere. We're interested in GitLab runner calls fetching new jobs to run:

      {container="gitlab"}
      | json | __error__=""
    + | uri="/api/v4/jobs/request"

The above would be a pretty typical Loki query. From here on, the example is intentionally a bit more involved to show off some of the features. Keep that in mind. You can do pretty complicated things, but you can get quite far with a simple query.

These logs have a user_agent field (thanks to parsing the JSON; Loki itself wasn't aware of that field before we asked for it) with the following format:

gitlab-runner 17.0.0 (17-0-stable; go1.21.9; linux/amd64)

Let's say we're only interested in API calls from any gitlab-runner agent with version 16.2.X or higher. Let's parse the contents of the user_agent field:

      {container="gitlab"}
      | json | __error__=""
      | uri="/api/v4/jobs/request"
    + | line_format "{{ .user_agent }}"
    + | pattern "gitlab-runner <major>.<minor>.<patch> (<_>)"

..and only look for specific version:

      {container="gitlab"}
      | json | __error__=""
      | uri="/api/v4/jobs/request"
      | line_format "{{ .user_agent }}"
      | pattern "gitlab-runner <major>.<minor>.<patch> (<_>)"
    + | major >= 17 or ( major = 16 and minor >= 2)

Note that Loki automatically converts the parsed version labels into numbers because we asked for numerical comparison. We didn't have to tell Loki during log collection to parse the numeric values, yet we can still use them as such. Remember the dilemma we had when processing 300 Multiple choices HTTP response code? Loki lets us choose at query time whether we want to work with numbers or strings.
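If LogQL is new to you, it may help to read the pipeline as ordinary code. The Python sketch below is a rough equivalent written for illustration, not a description of how Loki evaluates queries. The regular expression is stricter than the LogQL pattern (it only accepts digits), and lines that don't match are simply dropped here.

```python
import json
import re

# Roughly the same shape as the LogQL pattern
# "gitlab-runner <major>.<minor>.<patch> (<_>)", but digits only.
UA_PATTERN = re.compile(r"gitlab-runner (\d+)\.(\d+)\.(\d+) \(.*\)")


def matching_runner_requests(lines):
    """Yield parsed records for job requests from gitlab-runner 16.2 or newer."""
    for line in lines:
        try:
            record = json.loads(line)  # | json
        except json.JSONDecodeError:
            continue  # | __error__=""
        if not isinstance(record, dict):
            continue
        if record.get("uri") != "/api/v4/jobs/request":  # | uri="..."
            continue
        # | line_format + | pattern: pull the version out of user_agent
        match = UA_PATTERN.fullmatch(str(record.get("user_agent", "")))
        if match is None:
            continue
        # The numeric conversion happens here, at query time.
        major, minor = int(match.group(1)), int(match.group(2))
        if major >= 17 or (major == 16 and minor >= 2):
            yield record
```

Nothing in this function had to exist when the logs were collected, and that is the whole point.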

Our query could encounter some log lines that don't have a valid version in the user_agent field. We can decide to drop such logs (just like we did for JSON parsing above) or do something else instead.

Loki has pretty reasonable behavior here. If you didn't handle the error, for log queries it will simply not filter out the failing log line, but for metric queries (see below) it will error out. This makes sure you see all log lines when searching text, but it also prevents you from doing math on top of unexpected data.
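The difference between the two behaviors can be modeled with a small Python toy. This only mimics the behavior described above and has nothing to do with Loki's actual code. The function names are made up, and the error string just reuses the JSONParserErr value mentioned earlier.

```python
import json


def run_log_query(lines):
    """Log query: keep every line, tagging failures instead of dropping them."""
    results = []
    for line in lines:
        try:
            fields, error = json.loads(line), ""
        except json.JSONDecodeError:
            fields, error = {}, "JSONParserErr"
        results.append({"line": line, "fields": fields, "error": error})
    return results


def run_metric_query(lines):
    """Metric query: refuse to count when any line carries an unhandled error."""
    results = run_log_query(lines)
    failed = [r["line"] for r in results if r["error"]]
    if failed:
        raise ValueError(f"{len(failed)} line(s) failed to parse; handle the error first")
    return len(results)
```

Searching still shows you the odd lines, while counting forces you to decide what to do with them.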

Finally, wrap the results in a metric query to get the IP addresses from which the requests arrived in the last 15 minutes:

    + sum by(remote_ip) (
    +   count_over_time(
          {container="gitlab"}
          | json | __error__=""
          | uri="/api/v4/jobs/request"
          | line_format "{{ .user_agent }}"
          | pattern "gitlab-runner <major>.<minor>.<patch> (<_>)"
          | major >= 17 or ( major = 16 and minor >= 2)
    +   [15m])
    + )

As it turns out, the requests are all coming from inside the house server 😱💀:

So to sum up what we just did:

- Selected logs from specific container

- Picked only logs that parse as valid JSON

- Using the parsed fields, picked just logs referencing specific API endpoint

- Parsed one of the previously parsed fields (so nested parsing) to pull out version information and filter on that

- Counted occurrences of all logs matching all of the above criteria, in buckets defined by a field value we parsed in our query
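The last step, counting over a time window and grouping by a parsed field, is conceptually just this. Again, a Python sketch for illustration only. It assumes each entry is a `(timestamp, record)` pair with the record already parsed, and the sample data is made up.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def count_by_field(entries, field, now, window=timedelta(minutes=15)):
    """Count entries newer than now - window, grouped by the given field."""
    cutoff = now - window
    return Counter(
        record[field]
        for timestamp, record in entries
        if cutoff < timestamp <= now and field in record
    )


# Made-up sample data: three requests inside the window, one outside.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
entries = [
    (now - timedelta(minutes=1), {"remote_ip": "10.0.0.5"}),
    (now - timedelta(minutes=10), {"remote_ip": "10.0.0.5"}),
    (now - timedelta(minutes=5), {"remote_ip": "10.0.0.7"}),
    (now - timedelta(minutes=20), {"remote_ip": "10.0.0.5"}),
]
counts = count_by_field(entries, "remote_ip", now)
```

The 20 minute old entry falls outside the window, so only three requests are counted.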

What's really neat about this is that we did no prior work to allow such a query. What's more, if the log storage solution had required pre-parsing the logs and extracting field data up front, the chances that our specific query would be straightforward would be pretty slim.

3 years Loki retrospective

At this stage, I've been happily using Loki for years. It really does deliver on the promise of operational simplicity and cost effectiveness. I can't recall any upgrade that would be problematic, the service uses almost no CPU and it sits at about 100MB of RAM pretty much all the time. This of course reflects also how I use the service, but the point is that Loki can be used comfortably in that kind of environment.

At the same time it does enable me to search all of the logs and slice the data in any way I find useful. Sometimes I reach for Loki instead of using journalctl on the host just to use the filtering and parsing capabilities.

But what's most important, adding new logs is extremely easy. Which means that I do have the logs when I need them.
